Researchers use quantum-inspired approach to increase lidar resolution

13 July 2022

By capturing more details, new approach could make lidar useful for facial recognition

WASHINGTON — Researchers have shown that a quantum-inspired technique can be used to perform lidar imaging with a much higher depth resolution than is possible with conventional approaches. Lidar, which uses laser pulses to acquire 3D information about a scene or object, is usually best suited for imaging large objects such as topographical features or built structures due to its limited depth resolution.

“Although lidar can be used to image the overall shape of a person, it typically doesn’t capture finer details such as facial features,” said research team leader Ashley Lyons from the University of Glasgow in the United Kingdom. “By adding extra depth resolution, our approach could capture enough detail to not only see facial features but even someone’s fingerprints.”

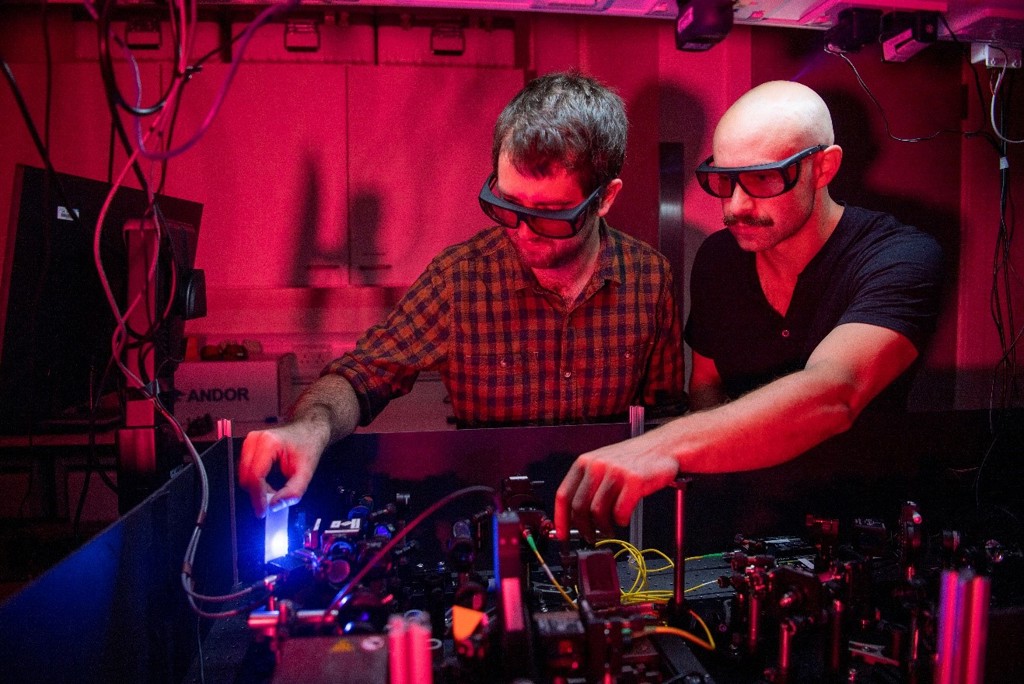

Caption: Researchers developed a quantum-inspired technique that can be used to perform lidar with a depth resolution that is much higher than conventional approaches.

Credit: Ashley Lyons, University of Glasgow

In the Optica Publishing Group journal Optics Express, Lyons and first author Robbie Murray describe the new technique, which they call imaging two-photon interference lidar. They show that it can distinguish reflective surfaces less than 2 millimeters apart and create 3D images with micron-scale depth resolution.

“This work could lead to much higher resolution 3D imaging than is possible now, which could be useful for facial recognition and tracking applications that involve small features,” said Lyons. “For practical use, conventional lidar could be used to get a rough idea of where an object might be and then the object could be carefully measured with our method.”

Using classically entangled light

The new technique uses “quantum inspired” interferometry, which extracts information from the way that two light beams interfere with each other. Entangled pairs of photons – or quantum light – are often used for this type of interferometry, but approaches based on photon entanglement tend to perform poorly in situations with high levels of light loss, which is almost always the case for lidar. To overcome this problem, the researchers applied what they’ve learned from quantum sensing to classical (non-quantum) light.

“With quantum entangled photons, only so many photon pairs per unit time can be generated before the setup becomes very technically demanding,” said Lyons. “These problems don’t exist with classical light, and it is possible to get around the high losses by turning up the laser power.”

When two identical photons meet at a beam splitter at the same time, they will always stick together, or become entangled, and leave in the same direction. Classical light shows the same behavior but to a lesser degree: most of the time, classical photons go in the same direction. The researchers used this property of classical light to time the arrival of one photon very precisely by looking at when two photons arrive at detectors simultaneously.
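As a rough illustration of how such an interference signal can act as a clock, the sketch below models the rate at which two detectors fire together as a dip centered on the delay at which the two photons overlap, then recovers that delay from simulated data. The Gaussian dip shape, the function names, and every number are assumptions made for illustration and are not taken from the paper; the visibility of 0.5 reflects the limit commonly cited for classical light.

```python
import math

def coincidence_rate(delay_fs, offset_fs, coherence_fs, visibility):
    """Normalized coincidence rate for an assumed Gaussian interference dip."""
    x = (delay_fs - offset_fs) / coherence_fs
    return 1.0 - visibility * math.exp(-x * x)

def estimate_dip_center(delays_fs, rates):
    """Estimate the dip position as the centroid of the dip depth (1 - rate)."""
    depths = [max(0.0, 1.0 - r) for r in rates]
    total = sum(depths)
    return sum(t * d for t, d in zip(delays_fs, depths)) / total

# Hypothetical scan: the reference delay is stepped in 5 fs increments,
# and the dip is assumed to sit at an unknown offset of 40 fs.
delays = [float(t) for t in range(-500, 501, 5)]
rates = [
    coincidence_rate(t, offset_fs=40.0, coherence_fs=100.0, visibility=0.5)
    for t in delays
]

center = estimate_dip_center(delays, rates)
print(f"estimated dip center: {center:.2f} fs")
```

In this noiseless toy case the centroid lands on the assumed offset. Real data would contain shot noise and would probably call for a proper curve fit.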

Enhancing depth resolution

“The time information gives us the ability to perform depth ranging by sending one of those photons out onto the 3D scene and then timing how long it takes for that photon to come back,” said Lyons. “Thus, two-photon interference lidar works much like conventional lidar but allows us to more precisely time how long it takes for that photon to reach the detector, which directly translates into greater depth resolution.”
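The link between timing and depth that Lyons describes can be sketched with the standard time-of-flight relation: light travels out and back, so depth is half the round-trip time multiplied by the speed of light. The function name and example values below are illustrative assumptions, and the calculation assumes propagation in vacuum or air.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def depth_from_round_trip(round_trip_s):
    """Convert a round-trip time in seconds into a one-way distance in meters."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

# A 1-nanosecond round trip corresponds to roughly 15 centimeters of depth.
depth_1ns_m = depth_from_round_trip(1e-9)

# The relation is linear, so it also maps a timing uncertainty onto a depth
# uncertainty: 100 femtoseconds corresponds to roughly 15 microns.
depth_100fs_m = depth_from_round_trip(100e-15)

print(f"1 ns   -> {depth_1ns_m * 100:.2f} cm")
print(f"100 fs -> {depth_100fs_m * 1e6:.2f} microns")
```

Because the relation is linear, any improvement in timing precision carries over directly to depth resolution.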

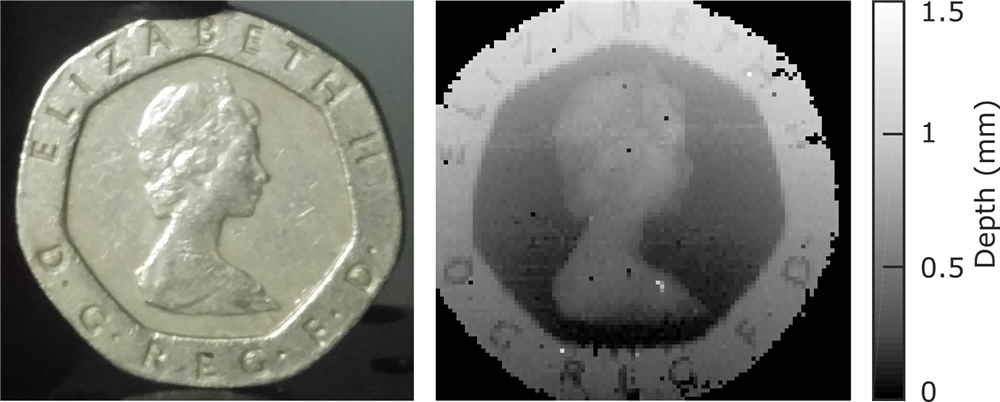

Caption: The researchers used their two-photon interference lidar method to create a detailed 3D map of a 20-pence coin.

Credit: Ashley Lyons, University of Glasgow

The researchers demonstrated the high depth resolution of two-photon interference lidar by using it to detect the two reflective surfaces of a piece of glass about 2 millimeters thick. Traditional lidar wouldn’t be able to distinguish these two surfaces, but the new method resolved both clearly. The researchers also used the method to create a detailed 3D map of a 20-pence coin with 7-micron depth resolution. This shows that the method could capture the level of detail necessary to differentiate key facial features or other differences between people.
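To give a sense of the timescales behind these two demonstrations, the sketch below estimates the extra round-trip delay for a reflection from the back of a 2-millimeter glass plate and for a 7-micron step in height. It assumes normal incidence and a refractive index of 1.5 for the glass, a typical textbook value that the article does not state; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def round_trip_delay(thickness_m, refractive_index=1.0):
    """Extra round-trip time in seconds for light crossing a layer twice."""
    return 2.0 * refractive_index * thickness_m / SPEED_OF_LIGHT_M_PER_S

# Back surface of a 2 mm glass plate (refractive index of 1.5 is assumed).
glass_delay_s = round_trip_delay(2e-3, refractive_index=1.5)

# A 7-micron height step in air, matching the quoted depth resolution.
step_delay_s = round_trip_delay(7e-6)

print(f"glass surfaces: {glass_delay_s * 1e12:.1f} ps apart")
print(f"7-micron step:  {step_delay_s * 1e15:.1f} fs")
```

Under these assumptions the two glass reflections arrive about 20 picoseconds apart, while a 7-micron step corresponds to a delay of under 50 femtoseconds.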

Two-photon interference lidar also works very well at the single-photon level, which could enhance more complex imaging approaches used for non-line-of-sight imaging or imaging through highly scattering media.

Currently, acquiring the images takes a long time because it requires scanning across all three spatial dimensions. The researchers are working to make this process faster by reducing the amount of scanning necessary to acquire 3D information.

Paper: R. Murray, A. Lyons, “Two-Photon Interference LiDAR Imaging,” Opt. Express, 30, 15 (2022)

DOI: doi.org/10.1364/OE.461248

About Optica Publishing Group

Optica Publishing Group is a division of the society, Optica, Advancing Optics and Photonics Worldwide. It publishes the largest collection of peer-reviewed and most-cited content in optics and photonics, including 18 prestigious journals, the society’s flagship member magazine, and papers and videos from more than 835 conferences. With over 400,000 journal articles, conference papers and videos to search, discover and access, our publications portfolio represents the full range of research in the field from around the globe.

About Optics Express

Optics Express reports on scientific and technology innovations in all aspects of optics and photonics. The bi-weekly journal provides rapid publication of original, peer-reviewed papers. It is published by Optica Publishing Group and led by Editor-in-Chief James Leger of the University of Minnesota, USA. Optics Express is an open-access journal and is available at no cost to readers online. For more information, visit Optics Express.
